Submitted by Alan Blackwell on Thu, 09/11/2023 - 15:49

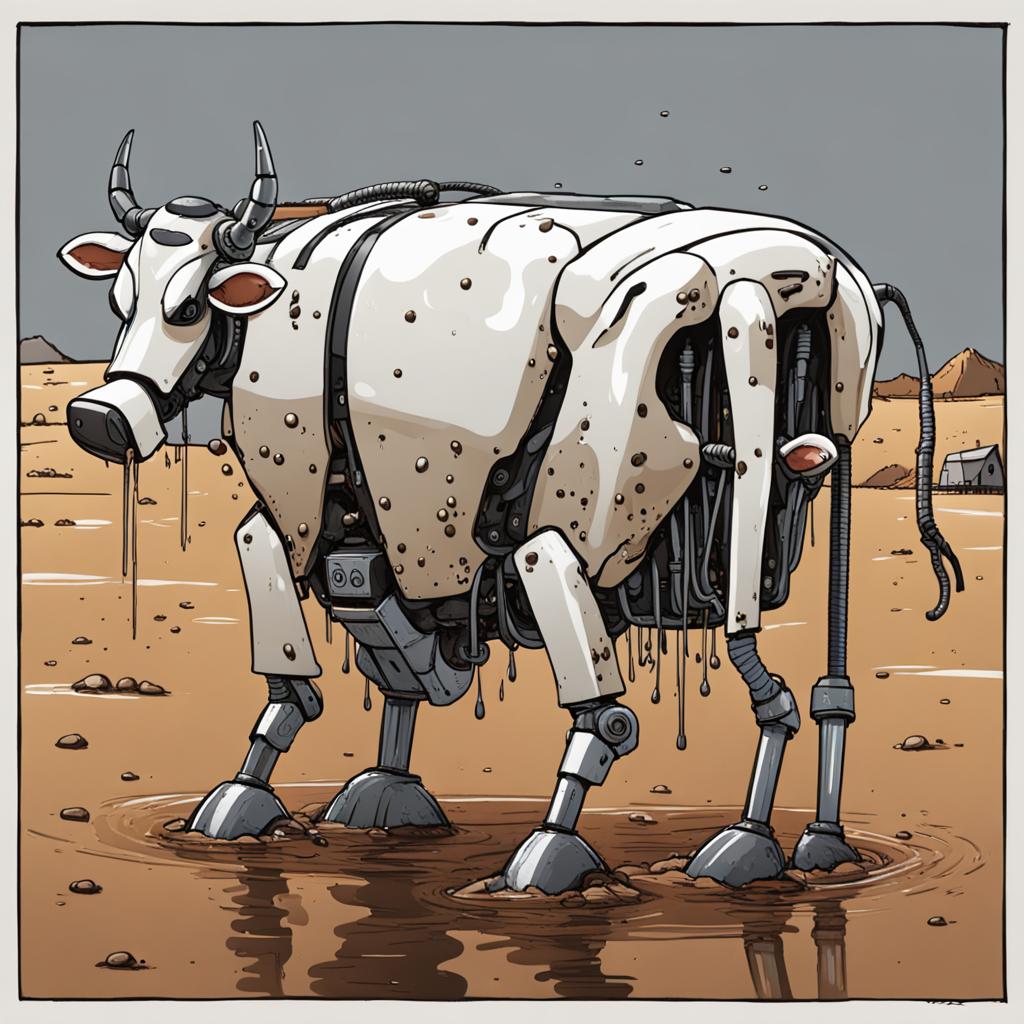

ChatGPT is a bullshit generator. To understand AI, we should think harder about bullshit

This post appears in a blog created in 2019 to focus on AI in Africa. Long before the release of ChatGPT, many wondered why AI would be relevant to Africans. But I’m writing in the week that US President Biden published an executive order on AI, and British PM Rishi Sunak listened enthusiastically to Elon Musk promising a magical AI future where nobody needs to work. When the richest man in the world talks up a storm with NATO leaders, Africa will get blown around in those political and economic winds.

Since my fieldwork in Africa, I’ve learned to ask different questions about AI, and in recent months, I’ve started to feel like the boy who questions the emperor’s new clothes. The problem I see, apparently not reported in coverage of Sunak’s AI Summit, is that AI literally produces bullshit. (Do I mean “literally”? My friends complain that I take everything literally, but I’m not a kleptomaniac.)

MIT Professor of AI Rodney Brooks summarises the working principle of ChatGPT as “it just makes up stuff that sounds good”. This is mathematically accurate: “sounds good” refers to an algorithm that imitates text found on the internet, while “makes up” refers to the basic randomness of relying on predictive text rather than logic or facts. Other leading researchers and professors of AI say the same thing, with more technical detail, as in the famous “stochastic parrots” paper by Emily Bender, Timnit Gebru and their colleagues, or Murray Shanahan’s explanation of the text prediction principles (references below).
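To make Brooks’s description concrete, here is a toy sketch. It is emphatically not how ChatGPT is implemented: real large language models use neural networks over sub-word tokens and vastly more text. It is a word-level bigram model that counts which word follows which in a tiny, made-up corpus, then generates text by picking each next word at random in proportion to those counts. The corpus and the function names (`train_bigrams`, `generate`) are hypothetical illustrations. The point is what is missing: no step anywhere checks whether the output is true.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, length, rng):
    """Produce up to `length` words by repeatedly sampling a likely next word.

    The only criterion is how often a word followed the previous one in the
    training text. Nothing here checks whether the result is true.
    """
    output = [start]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:  # dead end: this word never had a successor
            break
        words = list(followers)
        weights = [followers[w] for w in words]
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)


# Hypothetical toy corpus mixing true and false statements.
corpus = (
    "the moon orbits the earth . "
    "the earth orbits the sun . "
    "the sun orbits the moon . "
)

counts = train_bigrams(corpus)
rng = random.Random(0)
for _ in range(3):
    print(generate(counts, "the", 6, rng))
```

Every sentence this produces is locally fluent, because each word pair appeared in the training text, but whether it says the moon orbits the earth or the sun orbits the moon depends only on the dice.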

“Godfather of AI” Geoff Hinton, in recent public talks, explains that one of the greatest risks is not that chatbots will become super-intelligent, but that they will generate text that is super-persuasive without being intelligent, in the manner of Donald Trump or Boris Johnson. In a world where evidence and logic are not respected in public debate, Hinton imagines that systems operating without evidence or logic could become our overlords simply by being superhumanly persuasive, imitating and supplanting the worst kinds of political leader.

If this isn’t about evidence or logic, what is the scientific principle involved? Quite simply, we are talking about bullshit. Philosopher Harry Frankfurt, in his classic text On Bullshit, explains that the bullshitter “does not reject the authority of truth, as the liar does […] He pays no attention to it at all.” This is exactly what senior AI researchers such as Brooks, Bender, Shanahan and Hinton are telling us when they explain how ChatGPT works. The problem, as Frankfurt explains, is that “[b]y virtue of this, bullshit is a greater enemy of the truth than lies are” (p. 61). At a time when a public enquiry is reporting the astonishing behaviour of our most senior leaders during the Covid pandemic, the British people wonder how we came to elect such bullshitters to lead us. But as Frankfurt observes, “Bullshit is unavoidable whenever circumstances require someone to talk without knowing what he is talking about” (p. 63).

Perhaps this explains why the latest leader of the same government is so impressed by AI, and by billionaires promoting automated bullshit generators? But Frankfurt’s book is not the only classic text on bullshit. David Graeber’s famous analysis of Bullshit Jobs explains precisely why Elon Musk’s claim to the British PM is so revealing of the true nature of AI. Graeber revealed that over 30% of British workers believe their own job contributes nothing of any value to society. These are people who spend their lives writing pointless reports, relaying messages from one person to another, or listening to complaints they can do nothing about. Every part of their job could easily be done by ChatGPT.

Graeber observes that aspects of university education prepare young people to expect little more from life, training them to submit to bureaucratic processes while writing reams of text that few will ever read. In the kind of education that produces a Boris Johnson, verbal fluency and entertaining arguments may be rewarded more highly than close attention to the truth. As Graeber says, we train people for bullshit jobs by training them to generate bullshit. So is Elon Musk right, that nobody will have to work any more once AI is achieved? Perhaps so, if producing bullshit is the only kind of work we need, and you can see how the owner of (eX)Twitter might see the world that way.

AI systems like ChatGPT are trained with text from Twitter, Facebook, Reddit, and other huge archives of bullshit, alongside plenty of actual facts (including Wikipedia and text ripped off from professional writers). But there is no algorithm in ChatGPT to check which parts are true. The output is literally bullshit, exactly as defined by philosopher Harry Frankfurt, and as we would expect from Graeber’s study of bullshit jobs. Just as Twitter encourages bullshitting politicians who don’t care whether what they say is true or false, the archives of what they have said can be used to train automatic bullshit generators.

The problem isn’t AI. What we need to regulate is the bullshit. Perhaps the next British PM should convene a summit on bullshit, to figure out whose jobs are worthwhile, and which ones we could happily lose?

If you’d like to read more, my new book on Designing Alternatives to AI, to be published by MIT Press in 2024, is available as a free online preview.

References

Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell, "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?" in Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (March 2021), 610-623.

Harry G. Frankfurt, On Bullshit. Princeton University Press, 2005.

David Graeber, Bullshit Jobs: A Theory. Penguin Books, 2019.

Murray Shanahan, "Talking About Large Language Models," arXiv preprint arXiv:2212.03551 (2022).

Glenn Zorpette, "Just Calm Down About GPT-4 Already. And stop confusing performance with competence, says Rodney Brooks," IEEE Spectrum (17 May 2023).